Overview

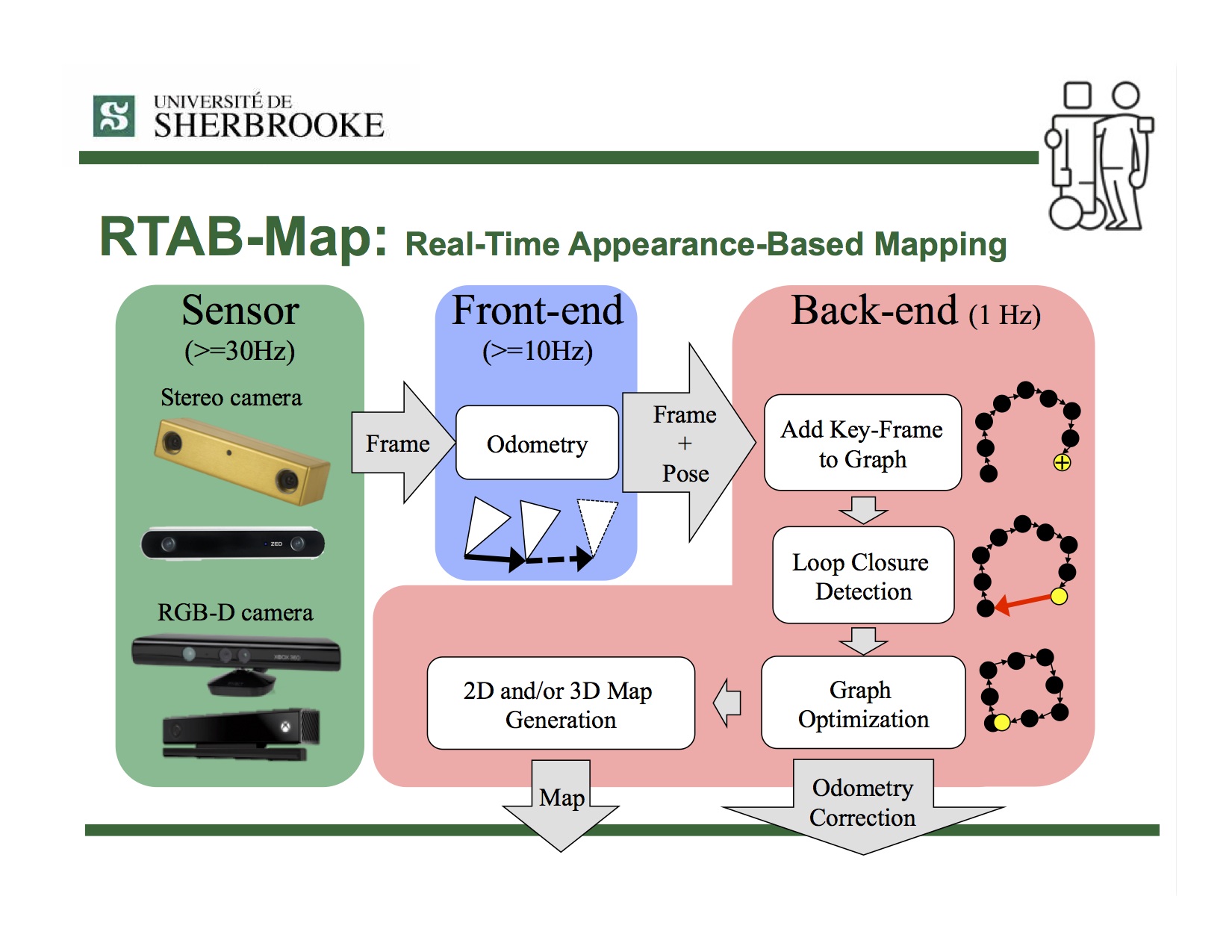

RTAB-Map (Real-Time Appearance-Based Mapping) is an RGB-D, Stereo and Lidar Graph-Based SLAM approach based on an incremental appearance-based loop closure detector. The loop closure detector uses a bag-of-words approach to determine how likely it is that a new image comes from a previous location or from a new location. When a loop closure hypothesis is accepted, a new constraint is added to the map’s graph, then a graph optimizer minimizes the errors in the map. A memory management approach limits the number of locations used for loop closure detection and graph optimization, so that real-time constraints are always respected in large-scale environments. RTAB-Map can be used alone with a handheld Kinect, a stereo camera or a 3D lidar for 6DoF mapping, or on a robot equipped with a laser rangefinder for 3DoF mapping.
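To make the idea of bag-of-words loop closure detection with a bounded set of candidate locations more concrete, here is a minimal toy sketch in plain Python. It is not RTAB-Map's implementation or API: the function names, the cosine-similarity score, the fixed threshold and the "move the oldest location out" policy are all assumptions chosen for illustration. Each location is reduced to a list of integer visual-word IDs.

```python
from collections import Counter
from math import sqrt


def bow_similarity(words_a, words_b):
    """Cosine similarity between two bags of visual-word IDs (illustrative score)."""
    hist_a, hist_b = Counter(words_a), Counter(words_b)
    dot = sum(count * hist_b[word] for word, count in hist_a.items())
    norm_a = sqrt(sum(c * c for c in hist_a.values()))
    norm_b = sqrt(sum(c * c for c in hist_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def detect_loop_closure(new_words, working_memory, threshold=0.5):
    """Return the ID of the best-matching past location, or None for a new location.

    working_memory maps a location ID to its list of visual-word IDs.
    The threshold is a hypothetical acceptance rule for this sketch.
    """
    best_id, best_score = None, 0.0
    for loc_id, words in working_memory.items():
        score = bow_similarity(new_words, words)
        if score > best_score:
            best_id, best_score = loc_id, score
    return best_id if best_score >= threshold else None


def limit_working_memory(working_memory, long_term_memory, max_locations):
    """Bound the number of locations compared against (toy memory management).

    Assumption: the oldest inserted locations are moved out first. They are
    kept in long_term_memory rather than deleted.
    """
    while len(working_memory) > max_locations:
        oldest_id = next(iter(working_memory))  # dicts preserve insertion order
        long_term_memory[oldest_id] = working_memory.pop(oldest_id)


if __name__ == "__main__":
    memory = {0: [1, 2, 3, 4], 1: [10, 11, 12, 13]}
    print(detect_loop_closure([1, 2, 3, 9], memory))  # revisits location 0
    print(detect_loop_closure([20, 21], memory))      # no match: new location
```

Bounding the working set keeps the per-image comparison cost roughly constant as the map grows, which is the reason the text gives for memory management. An accepted match would then become a new constraint in the map's graph, a step that is not shown here.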

Illumination-Invariant Visual Re-Localization

- M. Labbé and F. Michaud, “Multi-Session Visual SLAM for Illumination-Invariant Re-Localization in Indoor Environments,” in Frontiers in Robotics and AI, vol. 9, 2022. (Frontiers) (Dataset link) (Google Scholar)

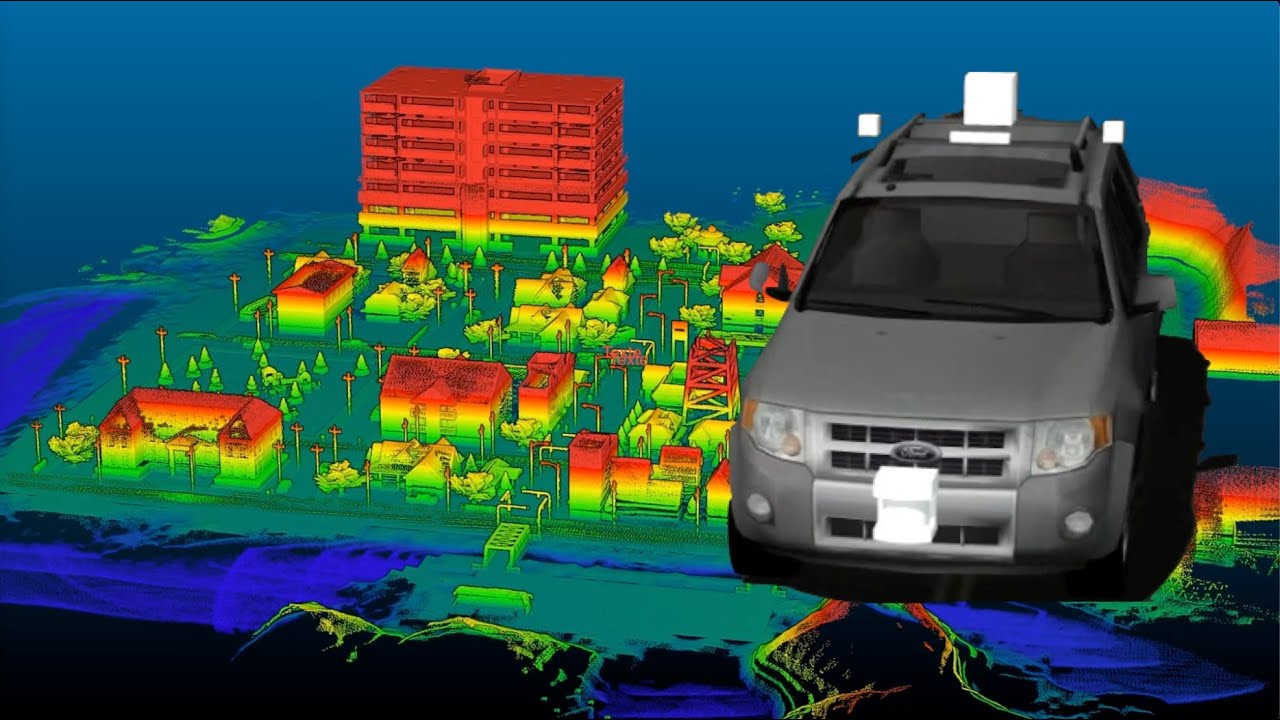

Lidar and Visual SLAM

- M. Labbé and F. Michaud, “RTAB-Map as an Open-Source Lidar and Visual SLAM Library for Large-Scale and Long-Term Online Operation,” in Journal of Field Robotics, vol. 36, no. 2, pp. 416–446, 2019. (Wiley) (Google Scholar)

Simultaneous Planning, Localization and Mapping (SPLAM)

- M. Labbé and F. Michaud, “Long-term online multi-session graph-based SPLAM with memory management,” in Autonomous Robots, vol. 42, no. 6, pp. 1133-1150, 2018. (Springer) (Google Scholar)

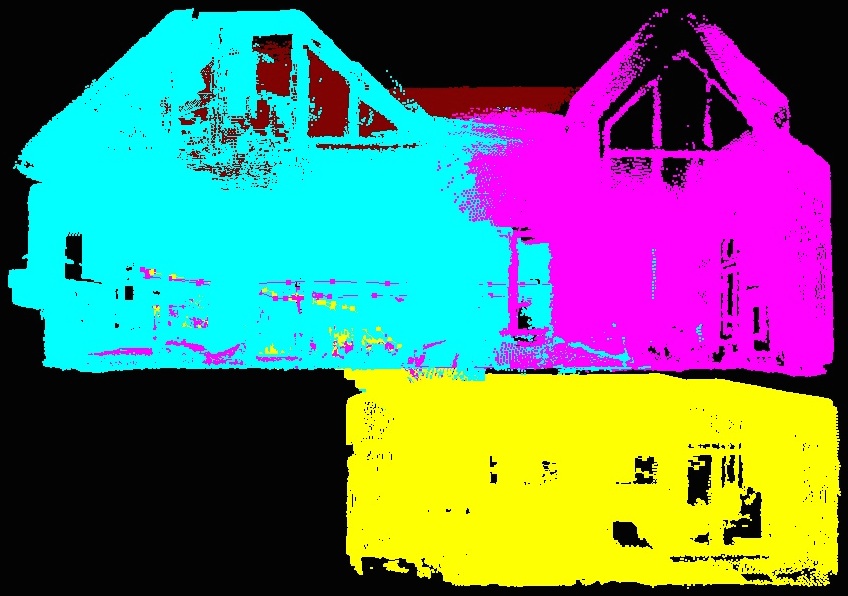

Multi-session SLAM

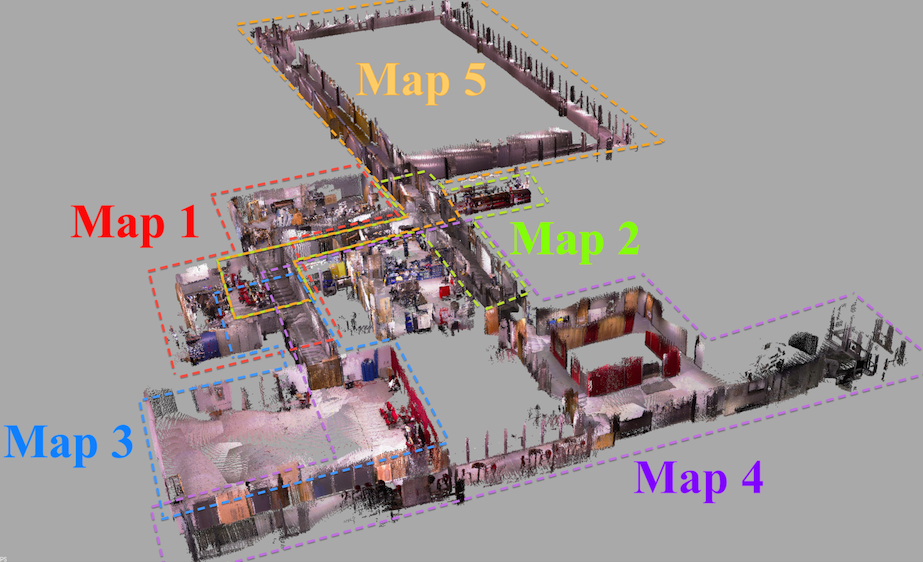

- M. Labbé and F. Michaud, “Online Global Loop Closure Detection for Large-Scale Multi-Session Graph-Based SLAM,” in Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, 2014. (IEEE Xplore) (Google Scholar)

- Results shown in this paper can be reproduced by the Multi-session mapping tutorial.

Loop closure detection

- M. Labbé and F. Michaud, “Appearance-Based Loop Closure Detection for Online Large-Scale and Long-Term Operation,” in IEEE Transactions on Robotics, vol. 29, no. 3, pp. 734-745, 2013. (IEEE Xplore) (Google Scholar)

- M. Labbé and F. Michaud, “Memory management for real-time appearance-based loop closure detection,” in Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, 2011, pp. 1271–1276. (IEEE Xplore) (Google Scholar)

- Visit RTAB-Map’s page on IntRoLab for detailed information on the loop closure detection approach and related datasets.

- Visit this page for a usage example of the CLI tool that can be used to evaluate only RTAB-Map’s loop closure detector on new datasets.

Install

- Installation instructions.

- Tutorials.

- Tools.

- For ROS users, take a look at the rtabmap page on the ROS wiki for a package overview. See also SetupOnYourRobot to learn how to integrate RTAB-Map on your robot.

Troubleshooting

Standalone

- Visit the wiki.

- Ask a question on RTAB-Map Forum (New address! August 9, 2021).

- Post an issue on GitHub

- For the loop closure detection approach, visit RTAB-Map on IntRoLab website

- Use GitHub Discussions (New! November 2022).

ROS

- Visit rtabmap_ros wiki page for nodes documentation, demos and tutorials on ROS.

- Ask a question on robotics.stackexchange.com (previously answers.ros.org) with the rtabmap or rtabmap-ros tag.

License

- If OpenCV is built without the nonfree module, RTAB-Map can be used under the permissive BSD License.

- If OpenCV is built with the nonfree module, RTAB-Map is free for research only because it depends on SURF features. SURF is not free for commercial use. Note that the SIFT patent has expired, so SIFT can be a good free alternative to SURF.

- SURF noncommercial notice: http://www.vision.ee.ethz.ch/~surf/download.html

Privacy Policy

The RTAB-Map app on the Google Play Store or the Apple App Store requires access to the camera to record the images used to create the map. When a map is saved, a database containing these images is created. That database is saved locally on the device (on the SD card, under the RTAB-Map folder). While location permission is required to install RTAB-Map Tango, GPS coordinates are not saved by default; the option “Settings->Mapping…->Save GPS” must be enabled first. RTAB-Map requires read/write access to the RTAB-Map folder only, to save, export and open maps. RTAB-Map doesn’t access any information outside the RTAB-Map folder. RTAB-Map doesn’t share information over the Internet unless the user explicitly exports a map to Sketchfab or elsewhere, which requires network access. In that case, the user will be asked for authorization (OAuth2) by Sketchfab (see their Privacy Policy here).

This website uses Google Analytics. See their Privacy Policy here.

Author

- Mathieu Labbé

- RTAB-Map’s page at IntRoLab

- Papers

- Similar projects: Find-Object

What’s new

December 2024

ROS2 packages overhaul (see ros2 branch): we added new ROS2 demos and examples. We also fixed message_filters-related synchronization issues that caused significant lag when using ROS2 nodes (in comparison to ROS1). Binaries ros-$ROS_DISTRO-rtabmap-ros will be released under version 0.21.9.

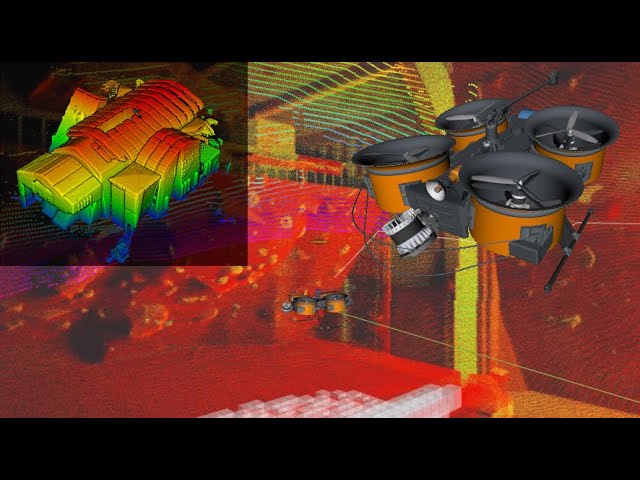

November 2023

We had new papers published this year on a very fun project about scanning underground mines. Here is a video of the SLAM part of the project, done with RTAB-Map (it is an early field test we did before going into the mines):

Related papers:

- Leclerc, M.A., Bass, J., Labbé, M., Dozois, D., Delisle, J., Rancourt, D. and Lussier Desbiens, A., 2023. “NetherDrone: A tethered and ducted propulsion multirotor drone for complex underground mining stopes inspection”. Drone Systems and Applications. (Canadian Science Publishing) (Editor’s Choice)

- Petit, L. and Desbiens, A.L., 2022. “Tape: Tether-aware path planning for autonomous exploration of unknown 3d cavities using a tangle-compatible tethered aerial robot”. IEEE Robotics and Automation Letters, 7(4), pp.10550-10557. (IEEE Xplore)

March 2023

New release v0.21.0!

June 2022

A new paper has been published: Multi-Session Visual SLAM for Illumination-Invariant Re-Localization in Indoor Environments. The general idea is to map the same environment multiple times to capture the illumination variations caused by natural and artificial lighting, so that the robot can afterwards localize at any hour of the day. For more details, see this page and the linked paper. The paper includes comparisons of robustness to illumination variations between binary descriptors (BRIEF/ORB, BRISK), float descriptors (SURF/SIFT/KAZE/DAISY) and learned descriptors (SuperPoint).

January 2022

Added demo for car mapping and localization with CitySim simulator and CAT Vehicle:

December 2021

Added indoor drone visual navigation example using move_base, PX4 and mavros:

More info on the rtabmap-drone-example github repo.

June 2021

- I’m pleased to announce that RTAB-Map is now on iOS (iPhone/iPad with LiDAR required). The app is available on App Store.

December 2020

New release v0.20.7!

August 2020

New release v0.20.3!

July 2020

New release v0.20.2!

November 2019

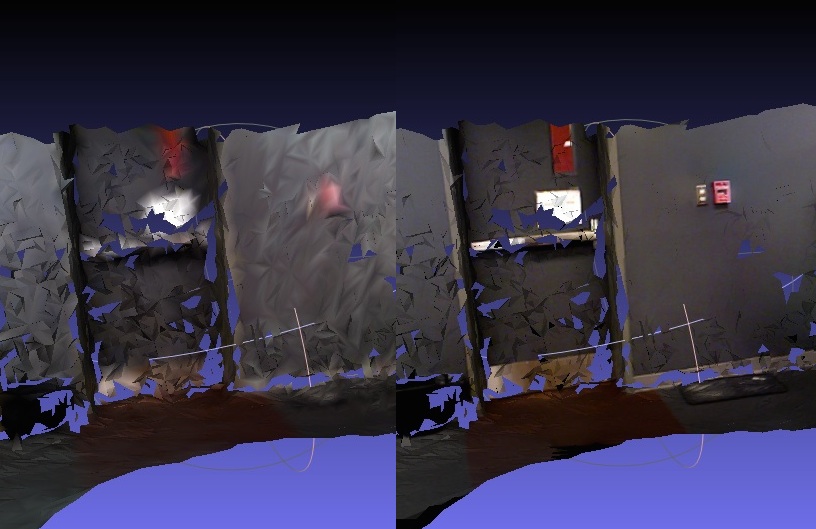

- The AliceVision library has been integrated into RTAB-Map to provide higher texture quality. Compare the updated version of the Ski Cottage on Sketchfab with the old version. Look at how the edges between camera textures are smoother (thanks to multi-band blending), significantly decreasing the sharp edge artifacts. The multi-band blending approach can be enabled in the File->Export Clouds dialog under the Texturing section. RTAB-Map should be built with AliceVision support (CUDA is not required, as only the texture pipeline is used). A patch is required to avoid problems with Eigen; refer to the Docker file here.

September 2017

- New version 0.14 of RTAB-Map Tango with GPS support. See it on the Play Store.

July 2017

- New version 0.13 of RTAB-Map Tango. See it on the Play Store.

- I uploaded a presentation that I did in 2015 at Université Laval in Québec! A summary of RTAB-Map as an RGBD-SLAM approach:

March 2017

- New tutorial: Multi-Session Mapping with RTAB-Map Tango

February 2017

- Version 0.11.14: Visit the release page for more info!

- Tango app also updated:

October 2016

- Application example: See how RTAB-Map is helping nuclear dismantling with Orano’s MANUELA project (Mobile Apparatus for Nuclear Expertise and Localisation Assistance):

- Version 0.11.11: Visit the release page for more info!

July 2016

- Version 0.11.8: Visit the release page for more info!

June 2016

- Version 0.11.7: Visit the release page for more info!

February 2016

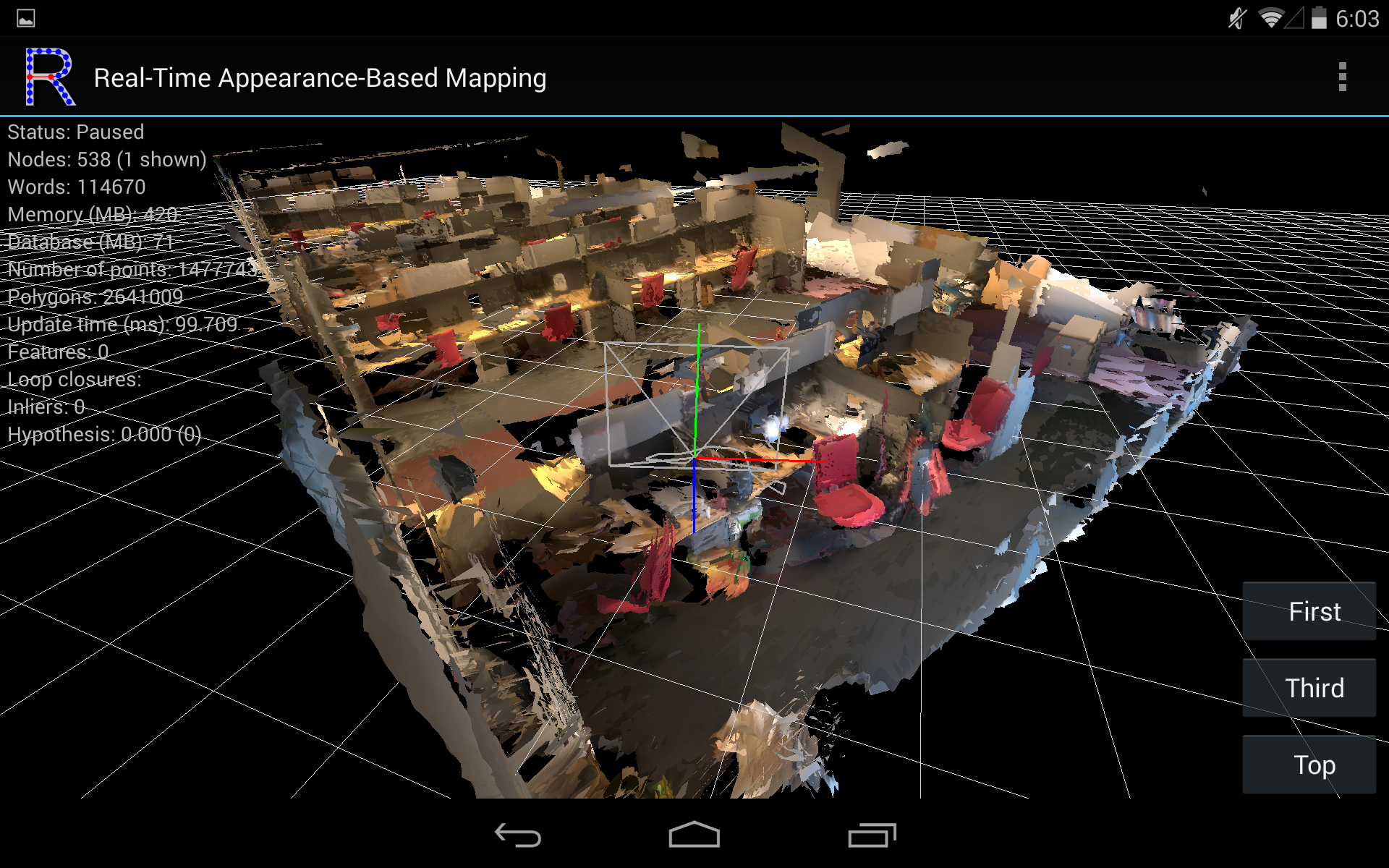

- I’m pleased to announce that RTAB-Map is now on Project Tango. The app is available on Google Play Store.

- Screenshots:

October 2015

- Version 0.10.10: Visit the release page for more info!

September 2015

- Version 0.10.6: Integration of a robust graph optimization approach called Vertigo (which uses g2o or GTSAM), see this page:

August 2015

- Version 0.10.5: New example to export data to MeshLab in order to add textures on a created mesh with low polygons, see this page:

October 2014

- New example to speed up RTAB-Map’s odometry, see this page:

September 2014

- At IROS 2014 in Chicago, a team using RTAB-Map for SLAM won the Kinect navigation contest held during the conference. See their press release for more details: Winning the IROS2014 Microsoft Kinect Challenge. I also added the Wiki page IROS2014KinectChallenge showing in detail the RTAB-Map part used in their solution.

August 2014

-

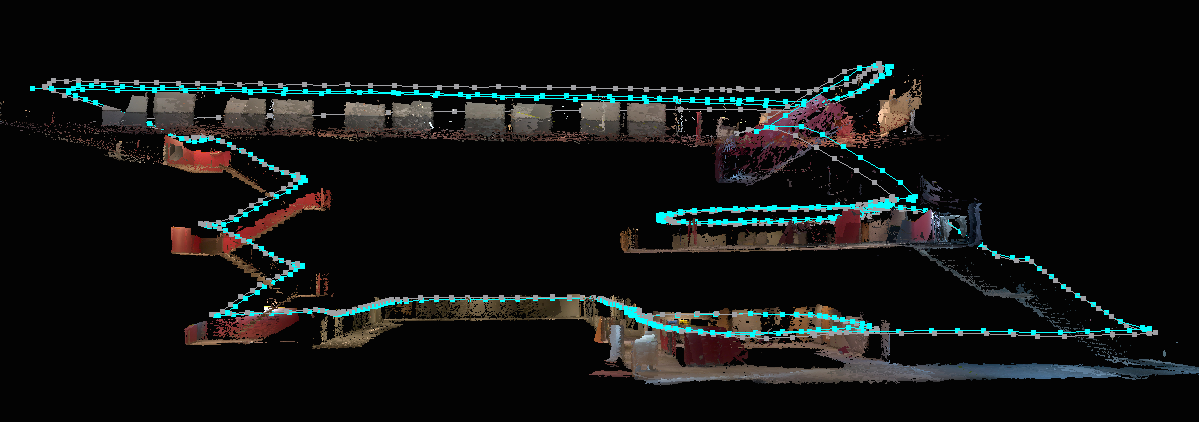

Here a comparison between reality and what can be shown in RVIZ (you can reproduce this demo here):

July 2014

-

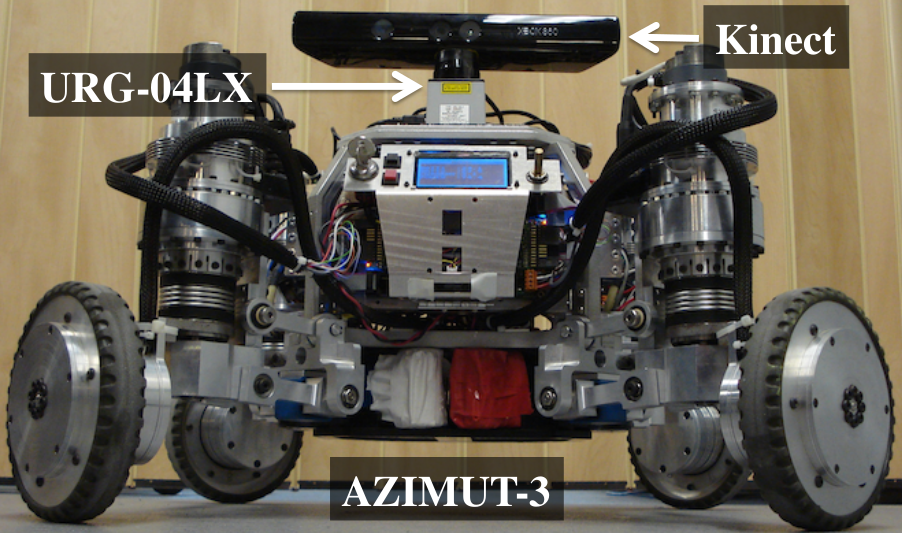

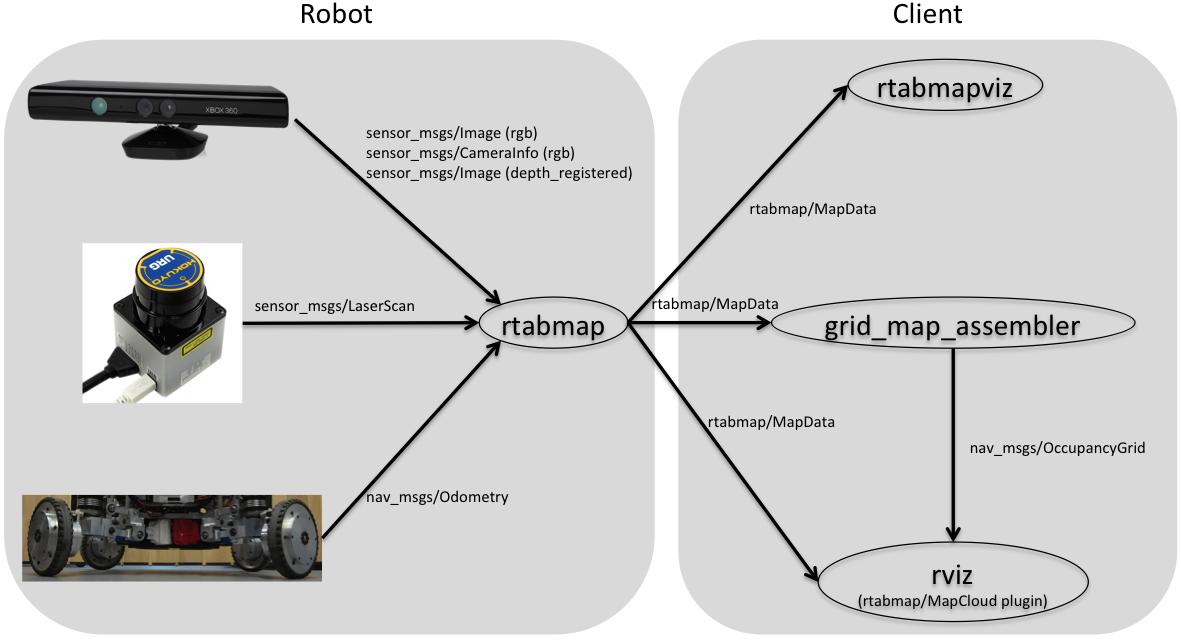

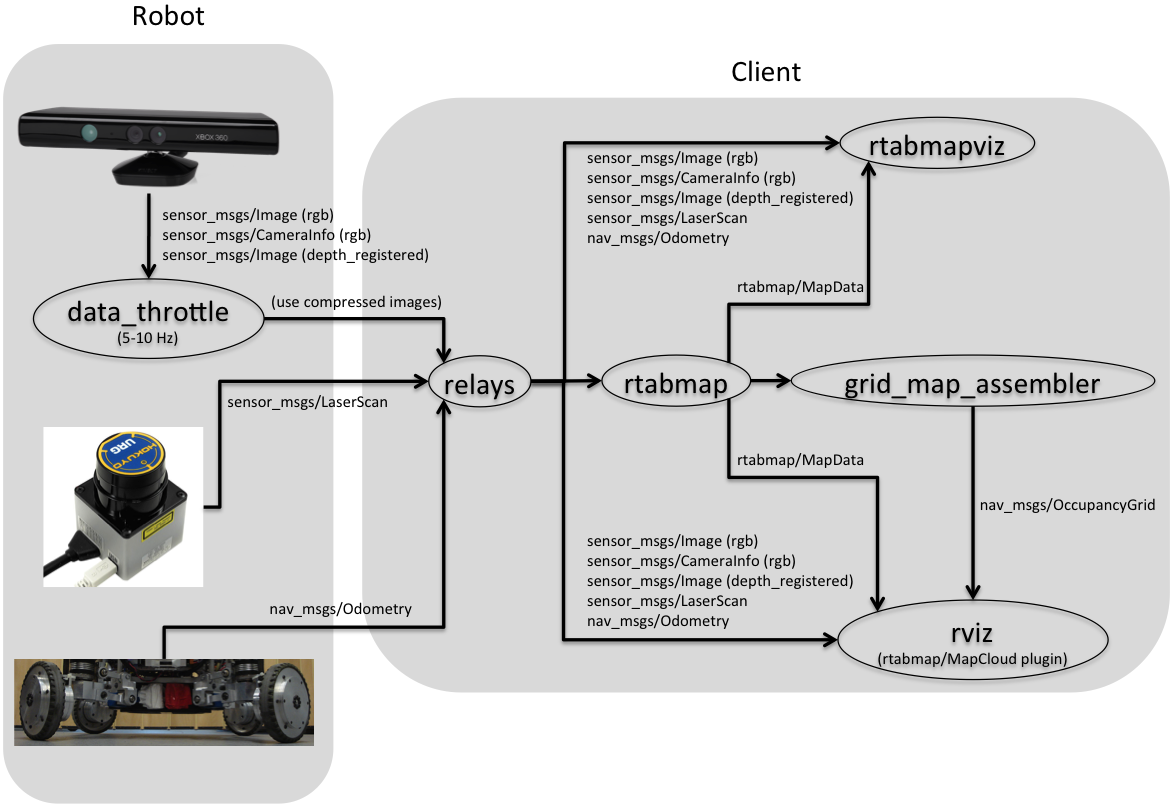

Added Setup on your robot wiki page to know how to integrate RTAB-Map on your ROS robot. Multiple sensor configurations are shown but the optimal configuration is to have a 2D laser, a Kinect-like sensor and odometry.

Onboard mapping Remote mapping

June 2014

- I’m glad to announce that my paper submitted to IROS 2014 was accepted! This paper explains in detail how RGB-D mapping with RTAB-Map is done. Results shown in this paper can be reproduced by the Multi-session mapping tutorial:

Videos

- RGBD-SLAM

- Appearance-based loop closure detection

- More loop closure detection videos here.