Represents a video source that can be added to a WebRTC call.

#include <VideoSource.h>

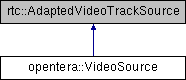

Inheritance diagram for opentera::VideoSource:

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | VideoSource (VideoSourceConfiguration configuration) | Creates a VideoSource. |
| | DECLARE_NOT_COPYABLE (VideoSource) | |
| | DECLARE_NOT_MOVABLE (VideoSource) | |
| void | sendFrame (const cv::Mat &bgrImg, int64_t timestampUs) | Sends a frame to the WebRTC transport layer. |
| bool | is_screencast () const override | Indicates if this source is screencast. |
| absl::optional< bool > | needs_denoising () const override | Indicates if this source needs denoising. |
| bool | remote () const override | Indicates if this source is remote. |
| webrtc::MediaSourceInterface::SourceState | state () const override | Indicates if this source is live. |
| void | AddRef () const override | |
| rtc::RefCountReleaseStatus | Release () const override | |

Detailed Description

Represents a video source that can be added to a WebRTC call.

Pass a shared_ptr to an instance of this class to the StreamClient, then call sendFrame for each frame.

Constructor & Destructor Documentation

◆ VideoSource()

explicit VideoSource::VideoSource (VideoSourceConfiguration configuration)

Creates a VideoSource.

- Parameters

- configuration: The configuration applied to the video stream by the image transport layer

Member Function Documentation

◆ is_screencast()

inline bool VideoSource::is_screencast () const override

Indicates if this source is screencast.

- Returns

- true if this source is a screencast

◆ needs_denoising()

inline absl::optional< bool > VideoSource::needs_denoising () const override

Indicates if this source needs denoising.

- Returns

- true if this source needs denoising

◆ remote()

inline bool VideoSource::remote () const override

Indicates if this source is remote.

- Returns

- Always false, because the source is local

◆ sendFrame()

void VideoSource::sendFrame (const cv::Mat & bgrImg, int64_t timestampUs)

Sends a frame to the WebRTC transport layer.

The frame may or may not be sent, depending on the state of the transport layer. The frame will be resized to match the size requested by the transport layer.

- Parameters

- bgrImg: BGR8 encoded frame data

- timestampUs: Frame timestamp in microseconds
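Because the frame timestamp is expressed in microseconds, the caller needs a consistent way to produce it. The following is a minimal sketch and not part of the OpenTera API: elapsedUs and frameTimestampUs are hypothetical helper names, and using std::chrono::steady_clock as the time base is an assumption made here for illustration, not something this documentation prescribes.

```cpp
#include <chrono>
#include <cstdint>

// Hypothetical helper (not part of OpenTera): microseconds elapsed between
// two steady_clock readings. steady_clock never goes backwards, so the
// result does not decrease across successive calls with the same start.
inline std::int64_t elapsedUs(std::chrono::steady_clock::time_point start,
                              std::chrono::steady_clock::time_point now)
{
    return std::chrono::duration_cast<std::chrono::microseconds>(now - start).count();
}

// Hypothetical helper: nominal timestamp of frame number frameIndex for a
// source running at a fixed integer frame rate (integer division truncates).
inline std::int64_t frameTimestampUs(std::int64_t frameIndex, std::int64_t framesPerSecond)
{
    return frameIndex * 1000000 / framesPerSecond;
}

// Assumed call site, with videoSource being a shared_ptr to a VideoSource
// and start captured once before the first frame:
//   videoSource->sendFrame(bgrImg, elapsedUs(start, std::chrono::steady_clock::now()));
```

The first helper suits live capture, where frames arrive at irregular intervals. The second suits a source that replays frames at a known fixed rate.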

◆ state()

inline webrtc::MediaSourceInterface::SourceState VideoSource::state () const override

Indicates if this source is live.

- Returns

- Always kLive, because the source is live

The documentation for this class was generated from the following files:

- VideoSource.h

- VideoSource.cpp